TL;DR

Setting up a CI/CD pipeline has become quite easy, and building one, even for a simple side project, is a great way to learn many things. In this article, we will set one up for one of my side projects, using Portainer, GitLab and Docker.

My sample project

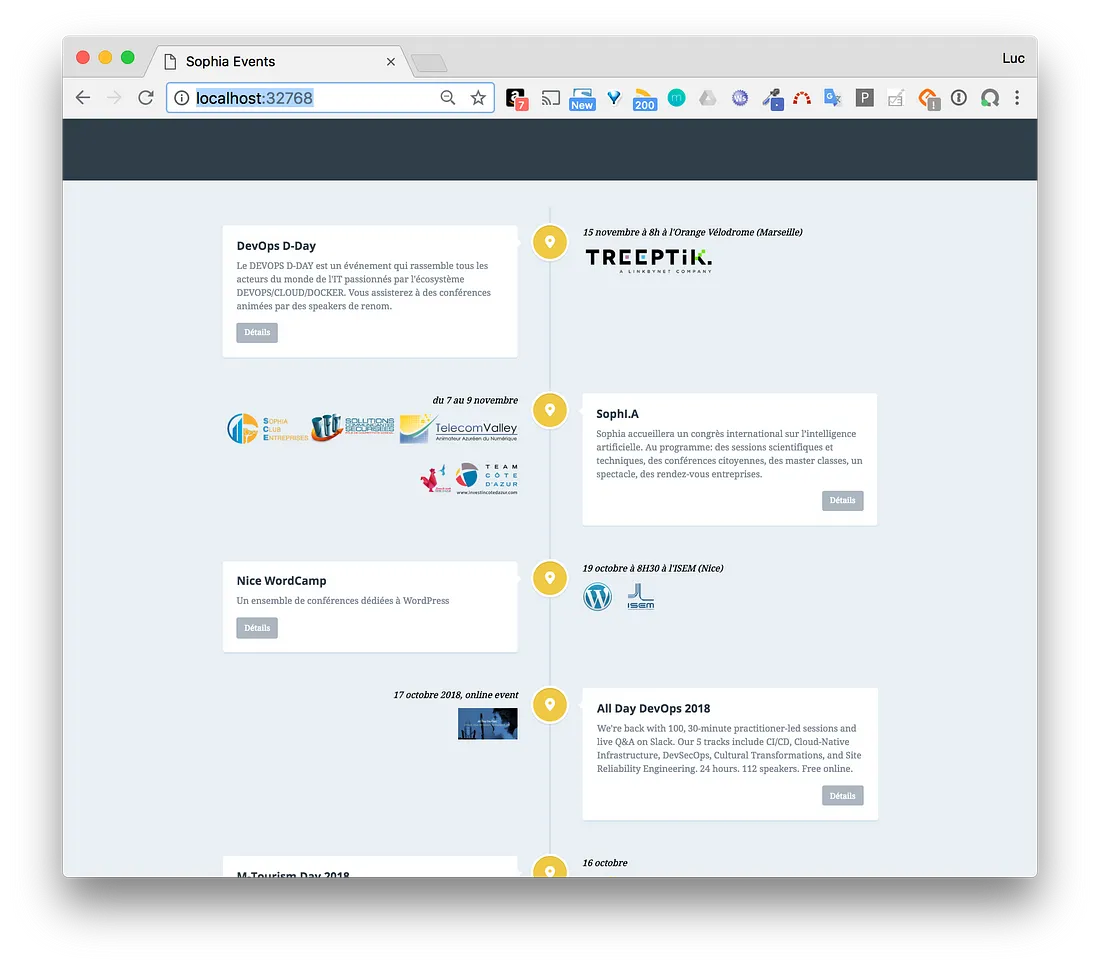

As the founder of the Apoti Development Association (A.D.A.), an NGO, I like organizing technical events in the Buea area (SW Region of Cameroon, Africa). People frequently asked me whether there was a way to know about all the upcoming events (meetups, JUGs, events organized by local associations, etc.). After looking into it, I realized there was no single place that listed them all. So I came up with https://apotidev.org/events, a simple web page that tries to keep an up-to-date list of all the events. The project is available on GitLab.

Disclaimer: this is a simple project, but its complexity is not what matters here. The components of the CI/CD pipeline detailed below can be used in almost the same way for more complex projects, and they are a particularly nice fit for microservices.

A look at the code

To keep things as simple as possible, all new events are added to a single events.json file. Here is a snippet of it:

```json
{
  "events": [
    {
      "title": "Let's Serve Day 2018",
      "desc": "Hi everyone! We're back with 50, 60-minute practitioner-led sessions and live Q&A on Slack. Our tracks include CI/CD, Cloud-Native Infrastructure, Cultural Transformations, DevSecOps, and Site Reliability Engineering. 24 hours. 112 speakers. Free online.",
      "date": "October 17, 2018, online event",
      "ts": "20181017T000000",
      "link": "https://www.alldaydevops.com/",
      "sponsors": [{"name": "all-day-devops"}]
    },
    {
      "title": "Creation of a Business Blockchain (lab) & introduction to smart contracts",
      "desc": "Come with your laptop! We invite you to join us to create the first prototype of a Business Blockchain (Lab) and get an introduction to smart contracts.",
      "ts": "20181004T181500",
      "date": "October 4 at 6:15 pm at CEEI",
      "link": "https://www.meetup.com/en-EN/IBM-Cloud-Cote-d-Azur-Meetup/events/254472667/",
      "sponsors": [{"name": "ibm"}]
    }
    …
  ]
}
```

A Mustache template is applied to this file to generate the final web assets.
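The template itself (index.mustache) is not shown here. The Python sketch below is a rough, standard-library-only illustration of what this rendering step does. It is a stand-in for Mustache, not the project's code. The HTML fragment and the function names are hypothetical, and sorting by the ts field is an assumption about what that field is used for.

```python
import html
import json
from datetime import datetime

# Hypothetical markup standing in for one iteration of the template's events
# section; the real index.mustache is not shown in this article.
EVENT_FRAGMENT = (
    '<article class="event">\n'
    '  <h2><a href="{link}">{title}</a></h2>\n'
    '  <p class="date">{date}</p>\n'
    '  <p>{desc}</p>\n'
    '</article>'
)


def parse_ts(ts: str) -> datetime:
    """Parse the compact timestamps used in events.json, e.g. "20181017T000000"."""
    return datetime.strptime(ts, "%Y%m%dT%H%M%S")


def render_events(data: dict) -> str:
    """Render all events in chronological order, HTML-escaping values as {{var}} does in Mustache."""
    events = sorted(data["events"], key=lambda event: parse_ts(event["ts"]))
    fragments = []
    for event in events:
        values = {key: html.escape(event[key]) for key in ("link", "title", "date", "desc")}
        fragments.append(EVENT_FRAGMENT.format(**values))
    return "\n".join(fragments)


if __name__ == "__main__":
    with open("events.json", encoding="utf-8") as f:
        print(render_events(json.load(f)))
```

Escaping every value matters here because titles such as "Business Blockchain (lab) & introduction to smart contracts" contain characters that are special in HTML.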

Docker multi-stage build

Once the web assets have been generated, they are copied into an nginx image, which is the image deployed on our target machine.

Thanks to Docker's multi-stage build feature, our build has two parts:

creation of the assets

generation of the final image containing the assets

Let's look at the Dockerfile used for the build:

```dockerfile
# Generate the assets
FROM node:8.12.0-alpine AS build
COPY . /build
WORKDIR /build
RUN npm i
RUN node clean.js
RUN ./node_modules/mustache/bin/mustache events.json index.mustache > index.html

# Build the final Docker image used to serve them
FROM nginx:1.14.0
COPY --from=build /build/*.html /usr/share/nginx/html/
COPY events.json /usr/share/nginx/html/
COPY css /usr/share/nginx/html/css
COPY js /usr/share/nginx/html/js
COPY img /usr/share/nginx/html/img
```

Local testing

Before going further, let's test the generation of the site locally. Just clone the repository and run the test script, which builds an image and runs a container from it.

```shell
# First, clone the repo
$ git clone git@gitlab.com:lucj/ada.events.git

# Next, cd into the repo
$ cd ada.events
```

Now let us run our test script:

```shell
$ ./test.sh
```

The output looks like this:

```text
Sending build context to Docker daemon 2.588MB
Step 1/12 : FROM node:8.12.0-alpine AS build
---> df48b68da02a
Step 2/12 : COPY . /build
---> f4005274aadf
Step 3/12 : WORKDIR /build
---> Running in 5222c3b6cf12
Removing intermediate container 5222c3b6cf12
---> 81947306e4af
Step 4/12 : RUN npm i
---> Running in de4e6182036b
npm notice created a lockfile as package-lock.json. You should commit this file.
npm WARN www@1.0.0 No repository field.
added 2 packages from 3 contributors and audited 2 packages in 1.675s
found 0 vulnerabilities
Removing intermediate container de4e6182036b
---> d0eb4627e01f
Step 5/12 : RUN node clean.js
---> Running in f4d3c4745901
Removing intermediate container f4d3c4745901
---> 602987ce7162
Step 6/12 : RUN ./node_modules/mustache/bin/mustache events.json index.mustache > index.html
---> Running in 05b5ebd73b89
Removing intermediate container 05b5ebd73b89
---> d982ff9cc61c
Step 7/12 : FROM nginx:1.14.0
---> 86898218889a
Step 8/12 : COPY --from=build /build/*.html /usr/share/nginx/html/
---> Using cache
---> e0c25127223f
Step 9/12 : COPY events.json /usr/share/nginx/html/
---> Using cache
---> 64e8a1c5e79d
Step 10/12 : COPY css /usr/share/nginx/html/css
---> Using cache
---> e524c31b64c2
Step 11/12 : COPY js /usr/share/nginx/html/js
---> Using cache
---> 1ef9dece9bb4
Step 12/12 : COPY img /usr/share/nginx/html/img
---> e50bf7836d2f
Successfully built e50bf7836d2f
Successfully tagged registry.gitlab.com/ada/ada.events:latest
=> web site available on http://localhost:32768
```

We can now access the web page using the URL printed at the end.
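The article does not show what test.sh contains. Judging from the output, it builds the image and starts a container whose port is published on a random host port (32768 above). The hypothetical Python equivalent below shows one way to do that. The use of docker run -P and docker port is an assumption; only the image tag comes from the output above.

```python
import subprocess

IMAGE = "registry.gitlab.com/ada/ada.events:latest"  # tag taken from the build output


def url_from_port_output(output: str) -> str:
    """Turn `docker port <container> 80` output (e.g. '0.0.0.0:32768') into a local URL."""
    first_mapping = output.strip().splitlines()[0]
    host_port = first_mapping.rsplit(":", 1)[1]
    return f"http://localhost:{host_port}"


def build_and_run() -> str:
    # Build the image from the Dockerfile in the current directory.
    subprocess.run(["docker", "image", "build", "-t", IMAGE, "."], check=True)
    # -P publishes the container's exposed port (80 for nginx) on a random host port.
    container_id = subprocess.run(
        ["docker", "container", "run", "-d", "-P", IMAGE],
        check=True, capture_output=True, text=True,
    ).stdout.strip()
    # Ask Docker which host port was mapped to the container's port 80.
    port_output = subprocess.run(
        ["docker", "port", container_id, "80"],
        check=True, capture_output=True, text=True,
    ).stdout
    return url_from_port_output(port_output)


if __name__ == "__main__":
    print(f"=> web site available on {build_and_run()}")
```

Publishing with -P lets Docker pick a free host port, which avoids clashes with anything already listening locally.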

Our target environment

Provisioning a virtual machine on a cloud provider

As you have probably noticed, this web site is not critical (only a few dozen visits a day), so it runs on a single virtual machine. This VM was created with Docker Machine on AWS.

Using Docker swarm

We configured the VM above so that its Docker daemon runs in Swarm mode. This lets us use the stack, service, config and secret primitives, as well as the great (and very easy to use) orchestration abilities of Docker Swarm.

The application running as a Docker stack

The file below, ada.yml, defines the service that runs our Nginx web server containing the web assets.

```yaml
version: "3.7"
services:
  www:
    image: registry.gitlab.com/lucj/sophia.events
    networks:
      - proxy
    deploy:
      mode: replicated
      replicas: 2
      update_config:
        parallelism: 1
        delay: 10s
      restart_policy:
        condition: on-failure
networks:
  proxy:
    external: true
```

Let's break this down:

The Docker image is stored in our private registry on gitlab.com.

The service runs in replicated mode with 2 replicas, which means that 2 tasks (2 containers) of the service are always running at the same time. Docker Swarm associates a virtual IP address (VIP) with the service, so each request targeted at the service is load balanced between the two replicas.

Every time the service is updated (for example, when a new version of the website is deployed), the replicas are updated one at a time: the second replica is updated 10 seconds after the first, as configured by parallelism: 1 and delay: 10s in update_config. This keeps the website available throughout the update.

Our service is also attached to the external proxy network. This lets our TLS termination service (which runs as a separate service on the swarm, outside this project) forward requests to the www service.
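The back-of-the-envelope Python model below shows why parallelism: 1 and delay: 10s keep the site online. It replaces tasks batch by batch and tracks how many replicas are still serving. It is a simplification, not Swarm's scheduler, and the 5-second restart time used in the example is an arbitrary assumption.

```python
from dataclasses import dataclass


@dataclass
class RollingUpdateResult:
    total_seconds: int  # time from the first task stopping to the last one serving again
    min_available: int  # fewest replicas serving at any moment during the update


def simulate_rolling_update(replicas: int, parallelism: int, delay_s: int, restart_s: int) -> RollingUpdateResult:
    """Simplified model of update_config: replace tasks `parallelism` at a time.

    Each replaced task is down for `restart_s` seconds; the next batch starts
    `delay_s` seconds after the previous batch is serving again.
    """
    t = 0
    min_available = replicas
    for start in range(0, replicas, parallelism):
        batch_size = min(parallelism, replicas - start)
        # While this batch restarts, only the other replicas serve traffic.
        min_available = min(min_available, replicas - batch_size)
        t += restart_s
        if start + batch_size < replicas:
            t += delay_s  # wait before touching the next batch
    return RollingUpdateResult(total_seconds=t, min_available=min_available)
```

With the values from the stack file, simulate_rolling_update(replicas=2, parallelism=1, delay_s=10, restart_s=5) reports min_available=1 and total_seconds=20. Setting parallelism to 2 drops min_available to 0: both replicas would restart at the same time, and the site would briefly be unreachable.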

The stack is deployed with the following command:

$ docker stack deploy -c ada.yml ada_events

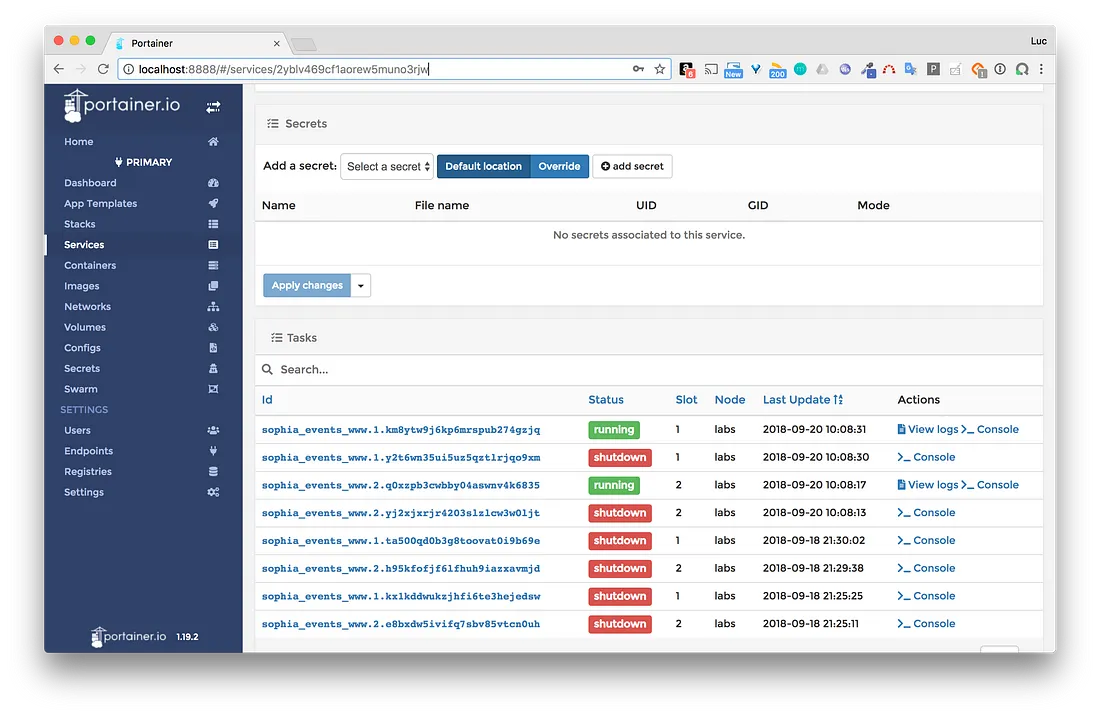

Portainer: One tool to manage them all

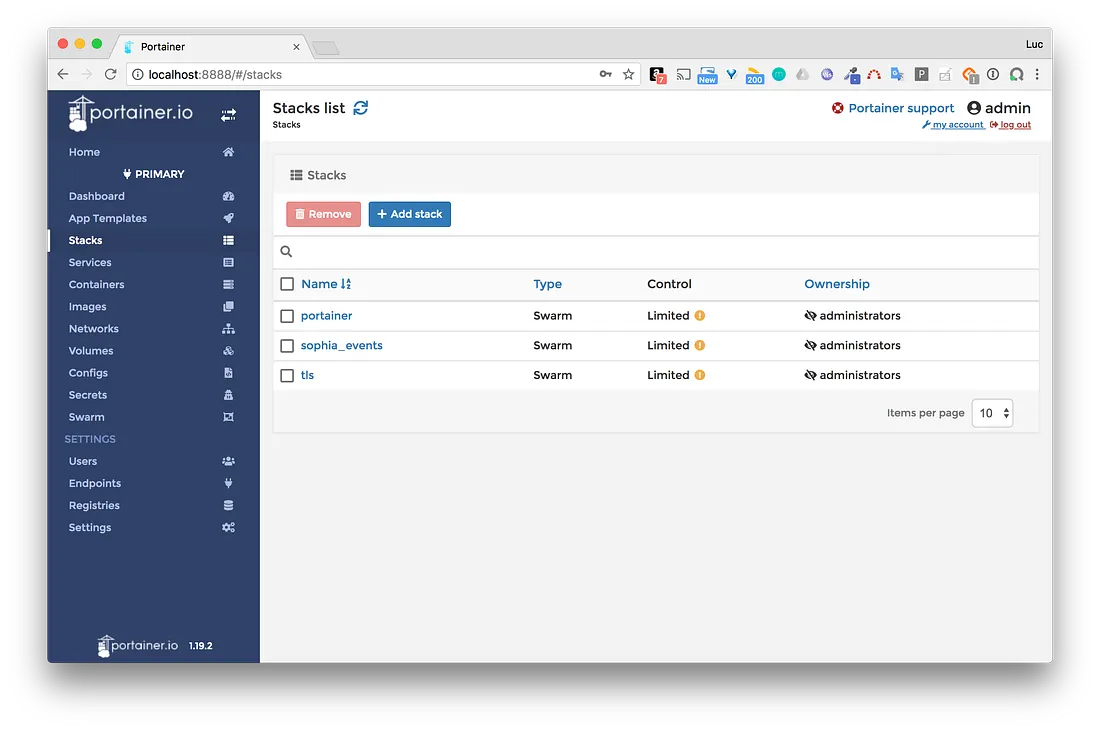

Portainer is a great web UI that makes it easy to manage Docker hosts and Docker Swarm clusters. The screenshot above shows its interface listing all the stacks available in our swarm.

As you can see above, our current setup has 3 stacks:

First, we have Portainer itself.

Then we have ada_events (or, in this case, sophia_events), which contains the service that runs our web site.

Last, we have tls, our TLS termination service.

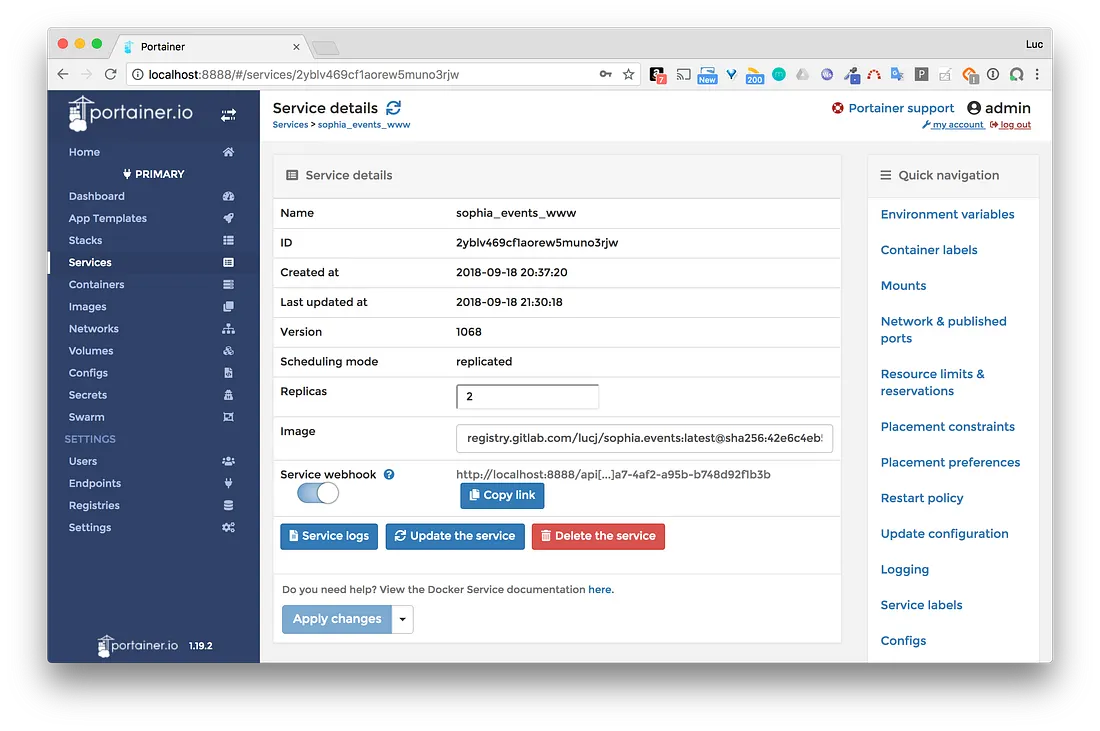

If we look at the details of the www service in the ada_events stack, we can see that the service webhook is enabled. This feature has been available since Portainer 1.19.2: it defines an HTTP POST endpoint that we can call to trigger an update of the service. As we will see later, our GitLab runner is in charge of calling this webhook.

Note: as you can see in the screenshot above, I use localhost:8888 to access Portainer. Since I don't want to expose our Portainer instance to the outside world, access goes through an SSH tunnel, which we open with the command below:

ssh -i ~/.docker/machine/machines/labs/id_rsa -NL 8888:localhost:9000 $USER@$HOST

Once the tunnel is open, all requests sent to port 8888 on our local machine (localhost:8888) are forwarded through SSH to port 9000 on the VM. Port 9000 is where Portainer runs on the VM, but this port is not open to the outside world: a security group in our AWS configuration blocks it.

Note: the SSH key used in the command above is the one Docker Machine generated when it created the VM.
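Before opening Portainer in a browser, it can be handy to check that the local end of the tunnel is listening. A minimal Python sketch follows. The helper name is hypothetical, and port 8888 comes from the ssh command above. A successful connection only proves that ssh is listening locally, not that Portainer answers on the VM.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, unreachable, ...
        return False


if __name__ == "__main__":
    # localhost:8888 is the local end of `ssh -NL 8888:localhost:9000 ...`.
    if port_is_open("localhost", 8888):
        print("Tunnel is up: open http://localhost:8888")
    else:
        print("Nothing is listening on localhost:8888; is the ssh tunnel running?")
```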

GitLab runner

A GitLab runner is the agent that executes CI/CD jobs: it works with GitLab CI to run the jobs defined in a project's .gitlab-ci.yml file, automating the testing and deployment of the application.

In our project, the GitLab runner is in charge of executing all the actions defined in the .gitlab-ci.yml file. On GitLab, you can either use your own runners or the shared runners that are available. In this project, we run our own runner on our AWS VM.

First, we register the runner with the following commands:

```shell
$ CONFIG_FOLDER=/tmp/gitlab-runner-config

$ docker run --rm -t -i \
    -v $CONFIG_FOLDER:/etc/gitlab-runner \
    gitlab/gitlab-runner register \
    --non-interactive \
    --executor "docker" \
    --docker-image docker:stable \
    --url "https://gitlab.com/" \
    --registration-token "$PROJECT_TOKEN" \
    --description "AWS Docker Runner" \
    --tag-list "docker" \
    --run-untagged \
    --locked="false" \
    --docker-privileged
```

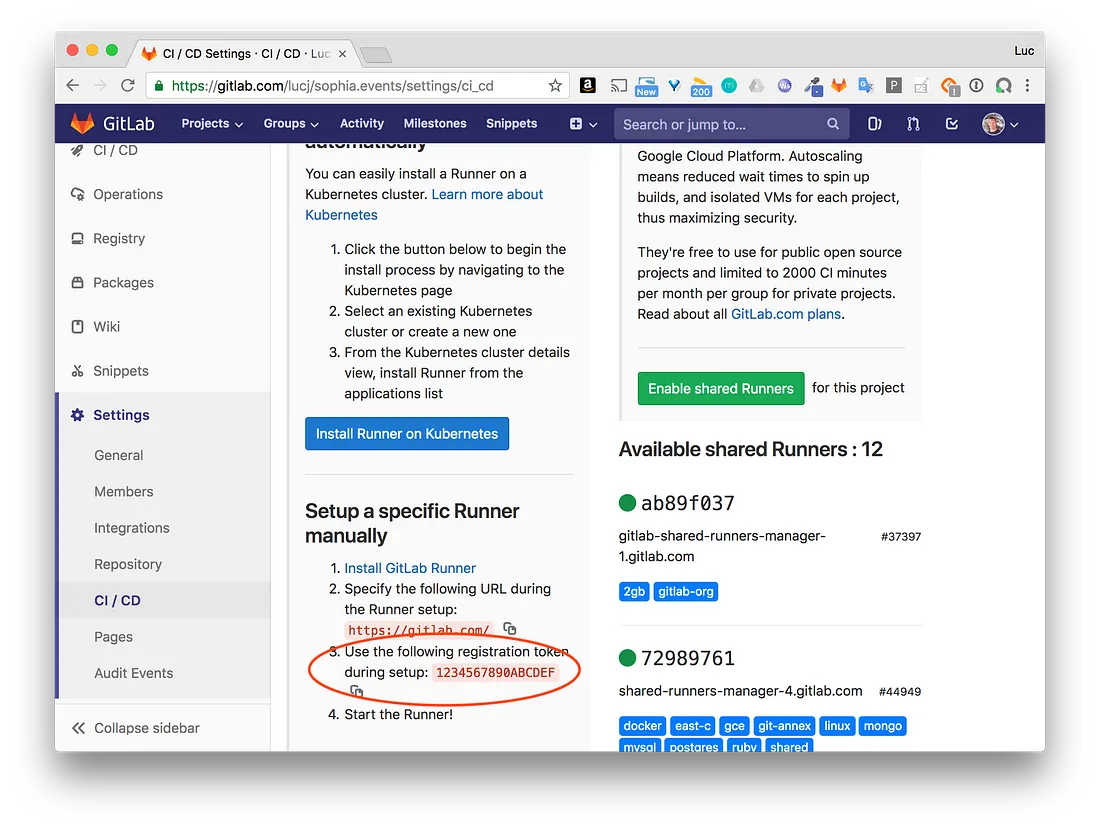

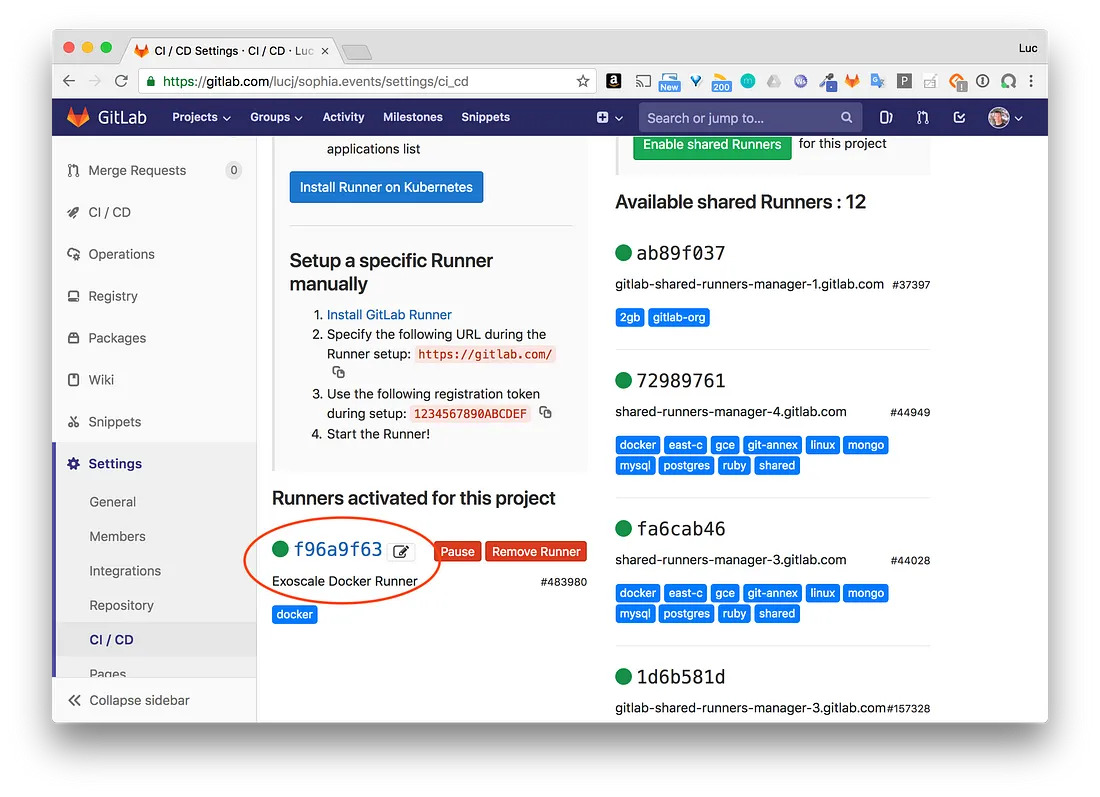

As you can see, the command needs the $PROJECT_TOKEN variable. This is the token used to register new runners, which we get from our project's page on GitLab.

Once the runner is registered, we can start it:

```shell
$ CONFIG_FOLDER=/tmp/gitlab-runner-config

$ docker run -d \
    --name gitlab-runner \
    --restart always \
    -v $CONFIG_FOLDER:/etc/gitlab-runner \
    -v /var/run/docker.sock:/var/run/docker.sock \
    gitlab/gitlab-runner:latest
```

Once the VM has been set up as a GitLab runner, the runner is listed on the CI/CD page under our project's settings.

Now that we have a runner, it receives work every time we push new commits to our Git repository, and it runs the stages defined in our .gitlab-ci.yml file sequentially. Let's look at this file, which configures our GitLab CI/CD pipeline:

```yaml
variables:
  CONTAINER_IMAGE: registry.gitlab.com/$CI_PROJECT_PATH
  DOCKER_HOST: tcp://docker:2375

stages:
  - test
  - build
  - deploy

test:
  stage: test
  image: node:8.12.0-alpine
  script:
    - npm i
    - npm test

build:
  stage: build
  image: docker:stable
  services:
    - docker:dind
  script:
    - docker image build -t $CONTAINER_IMAGE:$CI_COMMIT_SHA -t $CONTAINER_IMAGE:latest .
    - echo "$CI_JOB_TOKEN" | docker login -u gitlab-ci-token --password-stdin registry.gitlab.com
    - docker image push $CONTAINER_IMAGE:latest
    - docker image push $CONTAINER_IMAGE:$CI_COMMIT_SHA
  only:
    - master

deploy:
  stage: deploy
  image: alpine
  script:
    - apk add --update curl
    - curl -XPOST $WWW_WEBHOOK
  only:
    - master
```

Let's break down the stages:

First, the test stage runs some pre-checks, ensuring that the events.json file is well formed and that no images are missing.

Next, the build stage uses Docker to build the image and then pushes it to our GitLab registry.

Lastly, the deploy stage triggers the update of our service via a webhook sent to our Portainer app.
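The article does not detail what npm test checks. The hypothetical Python version below illustrates the kind of pre-checks described above. The required fields come from the events.json snippet. The img/sponsors/<name>.png location for sponsor logos is an assumption about the repository layout.

```python
import json
import sys
from datetime import datetime
from pathlib import Path
from typing import List

# Fields present on every event in the events.json snippet.
REQUIRED_FIELDS = ("title", "desc", "date", "ts", "link", "sponsors")


def check_events(events_path: Path, img_dir: Path) -> List[str]:
    """Return the problems found in events.json; an empty list means the checks pass."""
    try:
        data = json.loads(events_path.read_text(encoding="utf-8"))
    except json.JSONDecodeError as exc:
        return [f"{events_path} is not valid JSON: {exc}"]

    problems = []
    for index, event in enumerate(data.get("events", [])):
        label = event.get("title", f"event #{index}")
        missing = [field for field in REQUIRED_FIELDS if field not in event]
        if missing:
            problems.append(f"{label}: missing fields {missing}")
        if "ts" in event:
            try:
                datetime.strptime(event["ts"], "%Y%m%dT%H%M%S")
            except ValueError:
                problems.append(f"{label}: malformed ts {event['ts']!r}")
        for sponsor in event.get("sponsors", []):
            # Hypothetical convention: one logo per sponsor at img/sponsors/<name>.png
            logo = img_dir / "sponsors" / f"{sponsor['name']}.png"
            if not logo.is_file():
                problems.append(f"{label}: missing image {logo}")
    return problems


if __name__ == "__main__":
    issues = check_events(Path("events.json"), Path("img"))
    for issue in issues:
        print(issue)
    # A non-zero exit status makes the CI job, and therefore the pipeline, fail.
    sys.exit(1 if issues else 0)
```

Run from the repository root, it prints one line per problem and exits with a non-zero status when something is wrong. The non-zero status is what stops the pipeline before the build stage.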

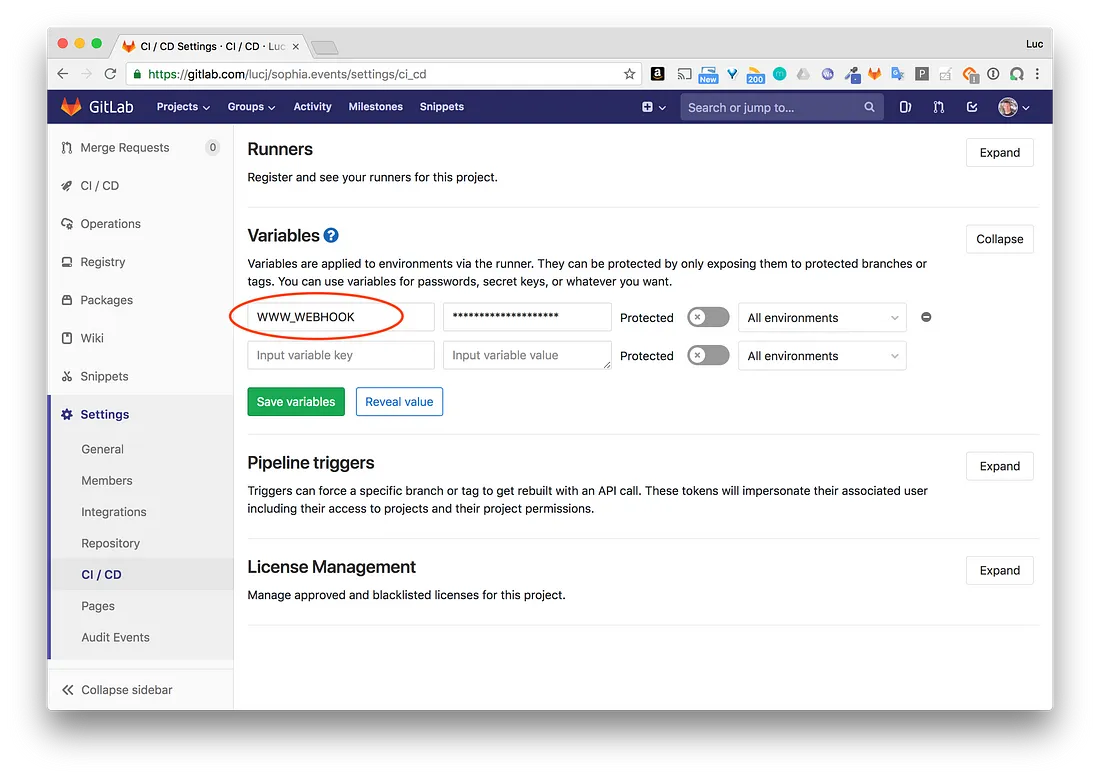

Note that the WWW_WEBHOOK variable is defined in the CI/CD settings of our project on GitLab.com.
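The deploy job only needs to send a POST request to the webhook URL. For illustration, here is the equivalent of the curl command in Python. It uses only the standard library and reads the URL from WWW_WEBHOOK, as the job does. The function name is hypothetical, and the sketch makes no assumption about the response body Portainer returns.

```python
import os
import urllib.request


def trigger_webhook(url: str, timeout: float = 10.0) -> int:
    """POST an empty body to the webhook and return the HTTP status code.

    Like curl, a URL without a scheme (e.g. 172.17.0.1:9000/api/...) is treated as http://.
    urllib raises HTTPError on 4xx/5xx answers, so a returned code is not an error status.
    """
    if "://" not in url:
        url = "http://" + url
    request = urllib.request.Request(url, data=b"", method="POST")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # WWW_WEBHOOK is the CI/CD variable defined in the GitLab project settings.
    print(f"Webhook called, HTTP {trigger_webhook(os.environ['WWW_WEBHOOK'])}")
```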

Some Notes

Our GitLab runner runs inside a container on our Docker Swarm node. As mentioned before, we could have used a shared runner instead (publicly available runners that share their time among jobs from different projects hosted on GitLab). However, the runner must be able to reach our Portainer endpoint to send the webhook, and I don't want our Portainer app to be publicly accessible, so I preferred running the runner inside the cluster. It is also more secure this way.

In addition, because the runner runs its jobs in Docker containers on the same host, a job can send the webhook to the IP address of the docker0 bridge and reach Portainer on port 9000, which Portainer exposes on the host. Our webhook therefore has the following format: 172.17.0.1:9000/api[…]a7-4af2-a95b-b748d92f1b3b
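For a container on the default bridge network, the docker0 address is normally its default gateway, so a job could look it up instead of hardcoding 172.17.0.1. The Python sketch below is Linux-only and assumes the job container sits on the default bridge. It reads /proc/net/route, where addresses are stored as little-endian hexadecimal.

```python
import socket
import struct


def default_gateway(route_table: str) -> str:
    """Return the default gateway IPv4 address from /proc/net/route contents."""
    for line in route_table.splitlines()[1:]:  # skip the header row
        fields = line.split()
        # Destination 00000000 is the default route; the RTF_GATEWAY flag (0x2) must be set.
        if len(fields) >= 4 and fields[1] == "00000000" and int(fields[3], 16) & 0x2:
            # The gateway is a little-endian hex IPv4 address, e.g. 010011AC -> 172.17.0.1
            return socket.inet_ntoa(struct.pack("<L", int(fields[2], 16)))
    raise LookupError("no default route found")


if __name__ == "__main__":
    with open("/proc/net/route") as f:
        print(default_gateway(f.read()))
```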

The Deployment

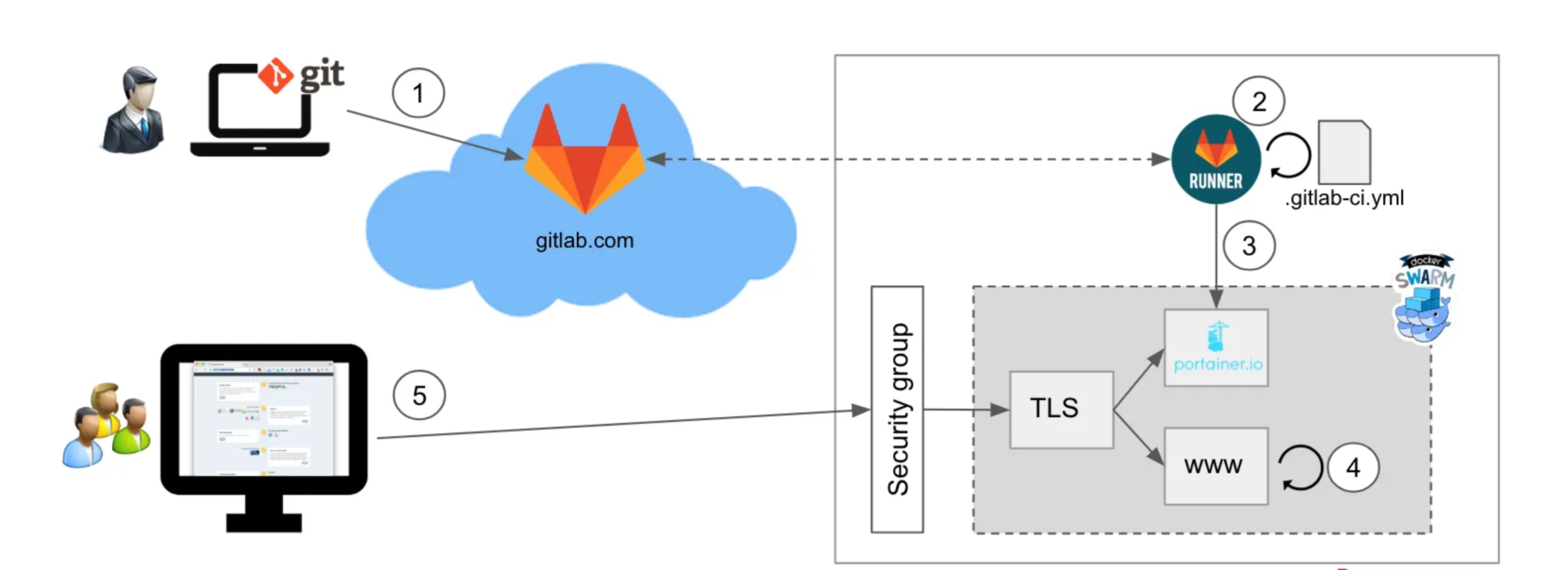

Deploying a new version of our app follows the workflow below:

First, a developer pushes some changes to our GitLab repository. The changes mainly involve adding or updating one or more events in the events.json file, and sometimes adding sponsors' logos.

After this, the GitLab runner performs all the actions defined in the .gitlab-ci.yml file.

Then the GitLab runner calls the webhook defined in Portainer.

Finally, upon receiving the webhook, Portainer deploys the newest version of the www service by calling the Docker Swarm API. Portainer can access this API because the /var/run/docker.sock socket is bind-mounted into its container when it is started.

Our users can now access the newest version of the events website.

Let's Test

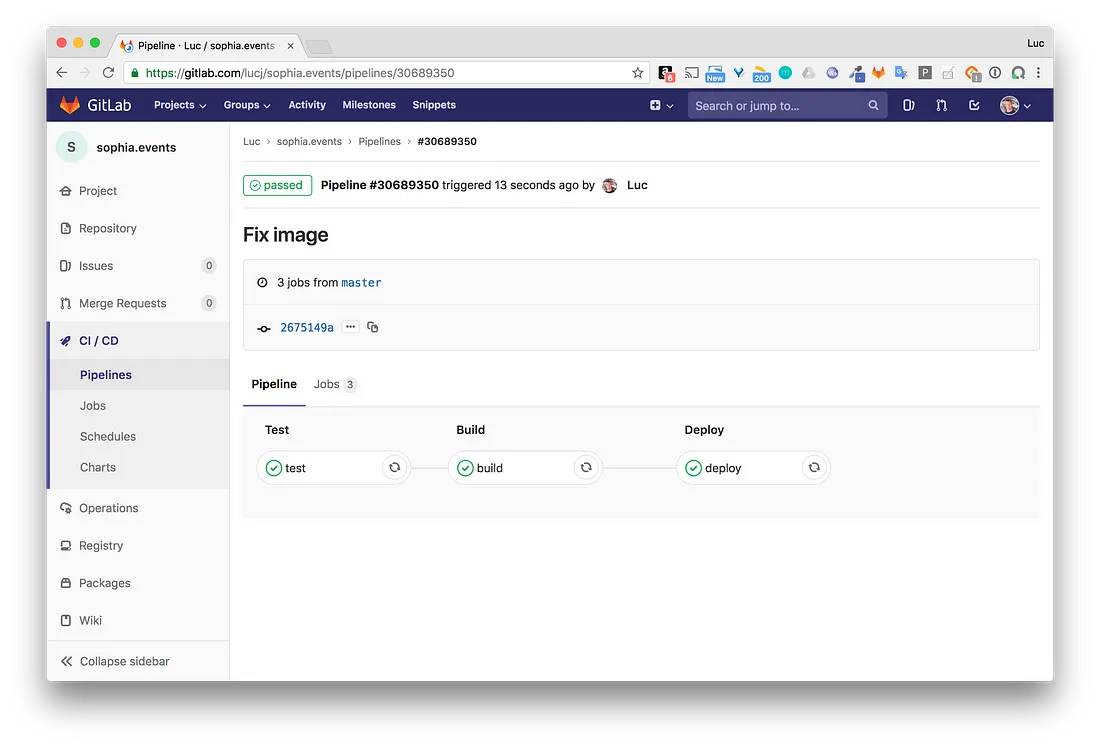

Let's test the pipeline by making a couple of changes to the code, then committing and pushing them:

```shell
$ git add .
$ git commit -m 'Fix image'
$ git push origin master
```

As you can see in the screenshot above, our push triggered the pipeline.

Breaking down the steps

On the Portainer side, the webhook was received and the service update was performed. Although we cannot see it clearly here, one replica was updated first. As mentioned before, the website remained accessible through the other replica, which was updated shortly afterwards (after the 10-second delay set in update_config).

Summary

Although this was a tiny project, setting up a CI/CD pipeline for it was a good exercise. First, it helped me get more familiar with GitLab, which had been on my to-learn list for quite some time; having done this project, I can say that it is an excellent, professional product. This project was also a great opportunity to play with the long-awaited webhook feature available in recent versions of Portainer. Lastly, choosing Docker Swarm for this project was a real no-brainer: it is so cool and easy to use!

I hope you found this project as interesting as I did. No matter how small your project is, it is a great idea to build it with CI/CD.

What projects are you working on and how has this article inspired you? Please comment below.