Table of contents

- Target infrastructure
- Introduction to Terraform
- Local setup
  - Google Cloud Platform access
  - GCP Service account (or gcloud CLI as alternative)
  - Install Cloud SDK & Terraform CLI
- Create infrastructure with Terraform
  - Setup repository
  - Alternative to using Service Account key
  - Network
  - Bastion instance
  - Kubernetes Cluster
  - Creating the infrastructure
- Additional resources

In this article, I want to share how I approached creating a private Kubernetes (GKE) cluster in Google Cloud Platform (GCP).

Target infrastructure

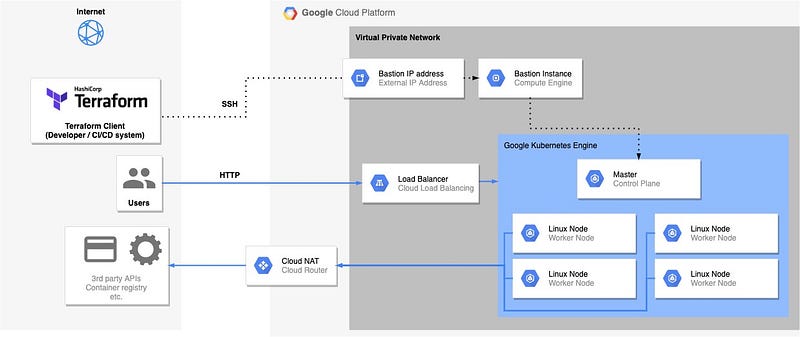

To give an overview, this is the target infrastructure we’re aiming for:

- A GKE cluster with Linux Worker Nodes.
- A Load Balancer that routes external traffic to the Worker Nodes.
- A NAT router that allows all our instances inside the VPC to access the internet.
- A Bastion Instance that allows us to access the Kubernetes Control Plane to run kubectl CLI commands. This is basically just a Linux machine with a proxy installed on it, exposed to the internet via an external IP address.

GCP infrastructure with a private GKE cluster, created with draw.io

Introduction to Terraform

To get started with Terraform, I found the HashiCorp tutorials useful:

The Terraform Language Documentation (Reference)

There are also lots of other resources available.

Local setup

Google Cloud Platform access

To be able to create resources in Google Cloud, you first need a Google account. GCP offers new customers a $300 credit for a limited free trial period (at time of writing). You can sign up for this at https://cloud.google.com/.

GCP Service account (or gcloud CLI as alternative)

We need to authenticate with GCP. Go to https://console.cloud.google.com/identity/serviceaccounts and create a service account. Terraform uses it to access the GCP APIs, so give it roles that allow it to manage the resources created in this article.

After creating the service account, create a JSON key for it and download it locally.

Install Cloud SDK & Terraform CLI

To run Terraform locally, the Google Cloud SDK (gcloud CLI) and the Terraform CLI need to be installed.

Create infrastructure with Terraform

You can find all the files on GitHub.

Setup repository

Clone the Git repository

https://github.com/apotitech/terraform-gcp-gke-infrastructure-main.git

Move the service account JSON key to infrastructure/service-account-credentials.json

Configure variables:

cp ./infrastructure/terraform.tfvars.example ./infrastructure/terraform.tfvars

Set project_id to your GCP project ID and service_account to the service account’s email address.
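For illustration, a filled-in terraform.tfvars could look roughly like the sketch below. All values are hypothetical, and region and main_zone are assumptions inferred from the var.region and var.main_zone references in infrastructure/main.tf; the example file in the repository is the authority on which variables actually exist.

```hcl
# Hypothetical values: replace them with your own project and service account.
project_id      = "my-gke-project"
service_account = "terraform@my-gke-project.iam.gserviceaccount.com"

# Assumed variables, inferred from var.region / var.main_zone in main.tf.
region    = "europe-west1"
main_zone = "europe-west1-b"
```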

Alternative to using Service Account key

As an alternative to the service account key, you can use the gcloud CLI tool. You can find its installation instructions in the Google Cloud SDK documentation.

Then log in using gcloud auth login. After that, store the OAuth access token that Terraform uses in the required environment variable:

export GOOGLE_OAUTH_ACCESS_TOKEN=$(gcloud auth print-access-token)

Keep in mind that this token is only valid for one hour by default, so you’ll need to export a fresh one once it expires.

Now let’s get to the actual Terraform code:

Network

infrastructure/main.tf

The terraform and provider blocks are needed to configure the GCP Terraform provider.
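To give an idea of what these blocks contain, here is a minimal sketch, not the repository’s file. It assumes the key location from the setup step and variables named project_id and region; no provider version constraint is set, so pin whichever google provider version you’ve tested with.

```hcl
terraform {
  required_providers {
    google = {
      source = "hashicorp/google"
    }
  }
}

variable "project_id" { type = string }
variable "region" { type = string }

provider "google" {
  # Service account key from the "Setup repository" step. When using the
  # gcloud alternative instead, remove this line: the provider then reads
  # the token from the GOOGLE_OAUTH_ACCESS_TOKEN environment variable.
  credentials = file("${path.module}/service-account-credentials.json")
  project     = var.project_id
  region      = var.region
}
```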

The google_networks module is a local module located in the ./networks directory. It defines the network resources we need:

infrastructure/networks/main.tf

This creates a VPC and a subnet, plus the NAT router that lets the instances inside our VPC make outbound connections to the internet.
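A minimal sketch of such a networks module might look like this; it is an illustration, not the repository’s actual file. It uses the google provider resources for a custom-mode VPC, a subnet, and a Cloud Router with Cloud NAT. The resource names and the 10.0.0.0/16 subnet range are hypothetical. The network and subnet outputs are shaped to match the module.google_networks.network.name and module.google_networks.subnet.name references used in infrastructure/main.tf.

```hcl
variable "project_id" { type = string }
variable "region" { type = string }

# Custom-mode VPC: subnets are created explicitly below.
resource "google_compute_network" "vpc" {
  project                 = var.project_id
  name                    = "app-network" # hypothetical name
  auto_create_subnetworks = false
}

resource "google_compute_subnetwork" "subnet" {
  project       = var.project_id
  name          = "app-subnet" # hypothetical name
  region        = var.region
  network       = google_compute_network.vpc.id
  ip_cidr_range = "10.0.0.0/16" # hypothetical range

  # Lets instances without external IPs reach Google APIs.
  private_ip_google_access = true
}

# Cloud NAT is attached to a Cloud Router in the same region.
resource "google_compute_router" "router" {
  project = var.project_id
  name    = "app-router"
  region  = var.region
  network = google_compute_network.vpc.id
}

resource "google_compute_router_nat" "nat" {
  project                            = var.project_id
  name                               = "app-nat"
  region                             = var.region
  router                             = google_compute_router.router.name
  nat_ip_allocate_option             = "AUTO_ONLY"
  source_subnetwork_ip_ranges_to_nat = "ALL_SUBNETWORKS_ALL_IP_RANGES"
}

# Whole resource objects, so callers can read .name, .id, etc.
output "network" {
  value = google_compute_network.vpc
}

output "subnet" {
  value = google_compute_subnetwork.subnet
}
```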

Note: The IP ranges defined in the locals block, which will be used for the GKE cluster, must not overlap with the subnet’s CIDR range. I defined them in the networking module to keep all networking-related settings in one place. They’re exposed as output values and used by the kubernetes_cluster module.
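As a sketch of that idea, the locals and outputs could look like the block below. The local and output names and the concrete ranges are hypothetical; they were picked so that none of them overlaps an assumed subnet range of 10.0.0.0/16 or each other. The /28 size for the control plane range follows the GKE requirement for a private cluster’s master_ipv4_cidr_block.

```hcl
# Hypothetical ranges; none overlaps the (assumed) 10.0.0.0/16 subnet.
locals {
  cluster_master_ip_cidr_range   = "172.16.0.0/28" # control plane, must be a /28
  cluster_pods_ip_cidr_range     = "10.1.0.0/16"   # Pod IP addresses
  cluster_services_ip_cidr_range = "10.2.0.0/20"   # Service (ClusterIP) addresses
}

output "cluster_master_ip_cidr_range" {
  value = local.cluster_master_ip_cidr_range
}

output "cluster_pods_ip_cidr_range" {
  value = local.cluster_pods_ip_cidr_range
}

output "cluster_services_ip_cidr_range" {
  value = local.cluster_services_ip_cidr_range
}
```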

Bastion instance

This module creates a virtual machine that runs a proxy. It allows us to reach the Kubernetes Control Plane from outside the GCP network and run kubectl commands.

infrastructure/main.tf

```hcl
# ...

module "bastion" {
  source = "./bastion"

  project_id   = var.project_id
  region       = var.region
  zone         = var.main_zone
  bastion_name = "app-cluster"
  network_name = module.google_networks.network.name
  subnet_name  = module.google_networks.subnet.name
}

# ...
```

infrastructure/bastion/main.tf
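A minimal sketch of such a bastion module follows; it is not the repository’s file. Its variables match the arguments passed in the module "bastion" call in infrastructure/main.tf. Assumptions: Tinyproxy as the proxy, a small Debian VM with an ephemeral external IP, and a firewall rule that opens SSH from anywhere, which you would want to narrow down. With Debian’s default configuration, Tinyproxy listens on port 8888 and only accepts local clients, which suits an SSH tunnel.

```hcl
variable "project_id" { type = string }
variable "region" { type = string } # passed by the module call; unused here
variable "zone" { type = string }
variable "bastion_name" { type = string }
variable "network_name" { type = string }
variable "subnet_name" { type = string }

resource "google_compute_instance" "bastion" {
  project      = var.project_id
  name         = "${var.bastion_name}-bastion"
  machine_type = "e2-micro" # hypothetical size; the proxy needs very little
  zone         = var.zone
  tags         = ["bastion"]

  boot_disk {
    initialize_params {
      image = "debian-cloud/debian-12"
    }
  }

  network_interface {
    subnetwork         = var.subnet_name
    subnetwork_project = var.project_id
    # An empty access_config block assigns an ephemeral external IP address.
    access_config {}
  }

  # Hypothetical proxy choice: install Tinyproxy on first boot.
  metadata_startup_script = <<-EOT
    apt-get update
    apt-get install -y tinyproxy
  EOT
}

# Allows SSH to instances tagged "bastion".
resource "google_compute_firewall" "bastion_ssh" {
  project       = var.project_id
  name          = "${var.bastion_name}-bastion-ssh"
  network       = var.network_name
  direction     = "INGRESS"
  source_ranges = ["0.0.0.0/0"] # hypothetical: restrict to your own IP range
  target_tags   = ["bastion"]

  allow {
    protocol = "tcp"
    ports    = ["22"]
  }
}
```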

The module also outputs two commands:

- The ssh output contains a command that opens an SSH tunnel to the Bastion instance.
- The kubectl_command output can be used to run kubectl with the Bastion instance acting as a proxy.

infrastructure/bastion/outputs.tf
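A sketch of these two outputs, assuming the VM is a google_compute_instance named bastion (a hypothetical name) and that the proxy listens on port 8888, as Tinyproxy does by default. gcloud compute ssh passes everything after -- to ssh, so -L forwards a local port through the tunnel, and kubectl honors the HTTPS_PROXY environment variable.

```hcl
output "ssh" {
  description = "Opens an SSH tunnel forwarding local port 8888 to the proxy on the bastion."
  value       = "gcloud compute ssh ${google_compute_instance.bastion.name} --project ${var.project_id} --zone ${var.zone} -- -L 8888:127.0.0.1:8888"
}

output "kubectl_command" {
  description = "Prefix for kubectl commands that should go through the tunnel."
  value       = "HTTPS_PROXY=localhost:8888 kubectl"
}
```

With the tunnel open in one terminal, a command such as HTTPS_PROXY=localhost:8888 kubectl get nodes in another terminal should reach the control plane. kubectl still needs a kubeconfig entry for the cluster, which gcloud container clusters get-credentials can create.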

Kubernetes Cluster

This module creates a GKE Kubernetes cluster and Linux worker nodes inside the previously created network.

infrastructure/main.tf

infrastructure/kubernetes_cluster/main.tf
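A minimal sketch of a private cluster module, assuming the network and subnet names and the three IP ranges are passed in as variables from the network module’s outputs in infrastructure/main.tf. The variable names, cluster name, machine type, node count, and the authorized CIDR (meant to be the subnet range that contains the bastion) are hypothetical. enable_private_nodes gives the nodes internal IPs only, and enable_private_endpoint makes the control plane reachable only from inside the VPC, which is why the bastion is needed.

```hcl
variable "project_id" { type = string }
variable "region" { type = string }
variable "network_name" { type = string }
variable "subnet_name" { type = string }
variable "master_ipv4_cidr_block" { type = string }
variable "pods_ipv4_cidr_block" { type = string }
variable "services_ipv4_cidr_block" { type = string }
variable "authorized_cidr_block" { type = string } # e.g. the bastion's subnet range

resource "google_container_cluster" "app" {
  project  = var.project_id
  name     = "app-cluster" # hypothetical name
  location = var.region    # regional cluster

  network    = var.network_name
  subnetwork = var.subnet_name

  # Nodes are managed in the separate node pool below.
  remove_default_node_pool = true
  initial_node_count       = 1

  # VPC-native networking with the Pod and Service ranges from the network module.
  ip_allocation_policy {
    cluster_ipv4_cidr_block  = var.pods_ipv4_cidr_block
    services_ipv4_cidr_block = var.services_ipv4_cidr_block
  }

  private_cluster_config {
    enable_private_nodes    = true
    enable_private_endpoint = true
    master_ipv4_cidr_block  = var.master_ipv4_cidr_block
  }

  # Only these internal ranges may talk to the control plane.
  master_authorized_networks_config {
    cidr_blocks {
      cidr_block   = var.authorized_cidr_block
      display_name = "bastion-subnet"
    }
  }
}

resource "google_container_node_pool" "linux" {
  project    = var.project_id
  name       = "linux-pool"
  location   = var.region
  cluster    = google_container_cluster.app.name
  node_count = 1 # per zone: a regional cluster gets one node in each of its zones

  node_config {
    machine_type = "e2-medium"      # hypothetical size
    image_type   = "COS_CONTAINERD" # Container-Optimized OS (Linux)
    oauth_scopes = ["https://www.googleapis.com/auth/cloud-platform"]
  }
}
```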

Creating the infrastructure

First, initialize Terraform. This downloads the Google provider and prepares the local modules:

cd infrastructure

terraform init

Next, create the infrastructure from the Terraform configuration:

terraform apply

The command lists all the GCP resources Terraform will create. Confirm by typing yes in the terminal. Terraform then creates all the infrastructure inside GCP, which takes a few minutes.

Additional resources

Some resources that were useful to me:

https://medium.com/@DazWilkin/gkes-cluster-ipv4-cidr-flag-69d25884a558

https://cloud.google.com/kubernetes-engine/docs/how-to/private-clusters

https://github.com/GoogleCloudPlatform/gke-private-cluster-demo

That’s all, folks. I hope this article has been helpful to you.
